In April 2025, Jones Road used AI to scrape the internet for customer insights. The output: a set of personas used as the foundation for a campaign. They then scouted real humans who embodied those personas, shot portraits of them and created some still lifes: objects and accoutrements from each persona’s life spilled onto a table, alongside Miracle Balm.

The result gave their team a focused, more-or-less data-driven brief for producing the new campaign. AI didn’t make the creative. Instead, it shaped the thinking and targeting.

Our Turn

A few months later, I developed explicitly AI-generated assets for Jones Road's paid media with my team at Campfire.

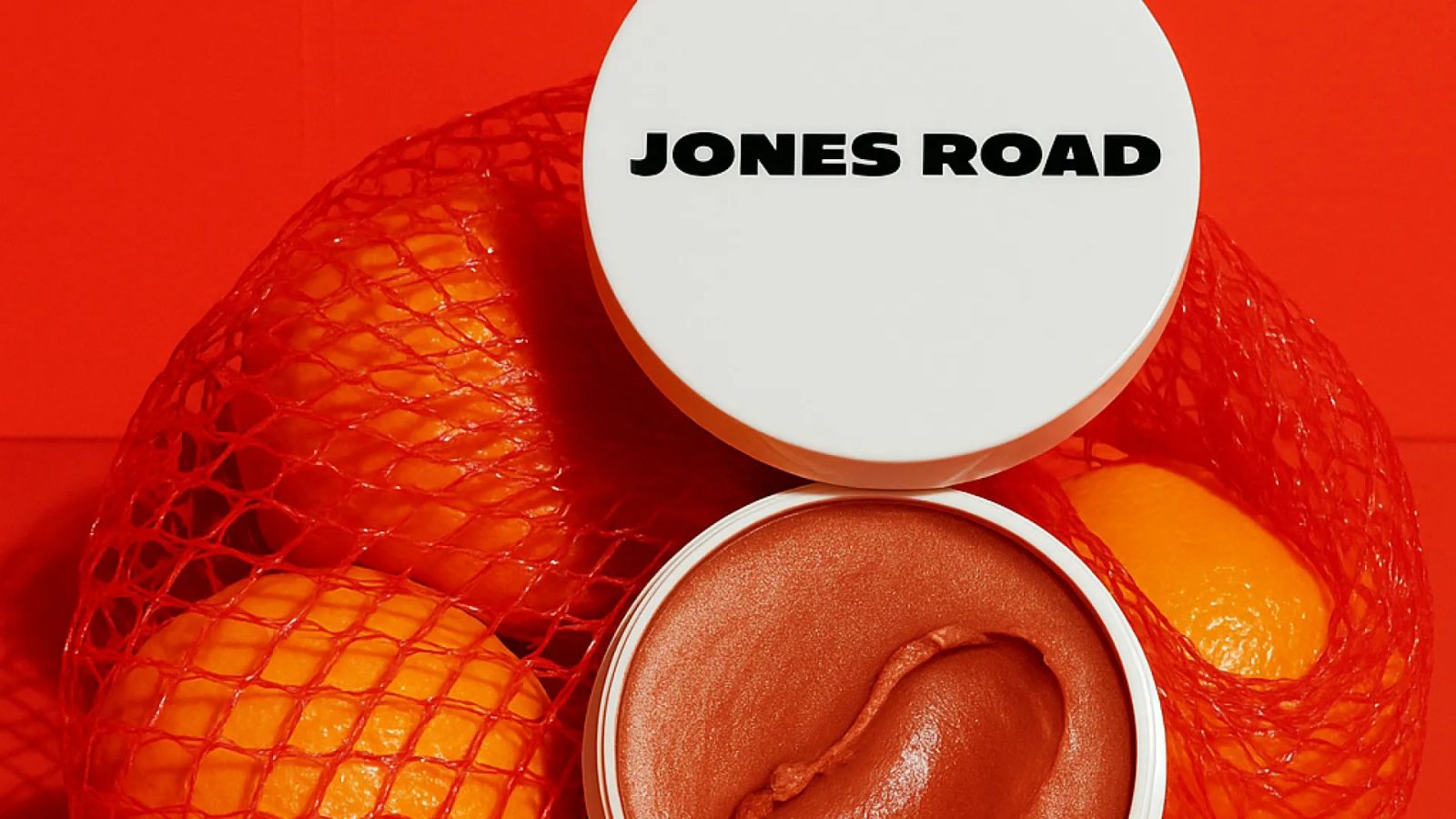

I started with ideas inspired by fashion photography, leaning into what AI is “good at” visually by referencing things like Jacquemus's surreal campaigns. From there, I focused on what makes beauty products inherently hard to sense digitally: their sensory and textural qualities. I used symbolic elements - flowers, fruit, and other tactile stand-ins that suggest smell and essence.

The generated images were beautiful, but something wasn’t landing.

“We Like Our Models”

The team wanted to use their existing, real models instead of the AI-generated ones. This could look like the usual hesitation around AI. But I think it's deeper than that. For brands and the people who build them, every expression of that brand matters. When AI replaces weeks of human decision-making, discussion, and attachment with a single output, the work may look finished - but it hasn’t earned belief.

Why Emotional Resonance Matters

As a marketer, I'm constantly putting myself in other people's shoes. I realized the brand team has probably met their models, chosen them intentionally, spent time with them on set, and learned their stories.

Over weeks, this adds up to thousands of small decisions and, in turn, a shared belief in the work: by the time it’s finished, it has buy-in from everyone who touched it.

What a Smart Tool Might Look Like

Some brands will always want to use their own models. But we can still imagine ways to close the emotional gap in AI generation - ways to make the work feel more earned, and more resonant.

Imagine a generation tool that makes people feel like an art director, not just a prompter - one that gives users the language of photography.

Instead of abstract prompts, it could offer concrete decisions: lenses, lighting, framing. It would explain what each choice does, and render with the realism of an actual studio. By reintroducing decision-making into the process, the tool could rebuild emotional investment through interaction, not just output.

And who knows - maybe in the process, it doesn’t just generate images, but teaches taste. Maybe it even inspires someone to pick up a camera.